Presets versus quality in x264 encoding

2010-12-14 20:44

Tags: ssim, encoding, h.264, video, x264

I’m scoping a project that will require re-encoding a large training video library into HTML5- and Flash-compatible formats. As of today, this means using H.264-based video for the best compatibility and quality (although WebM might become an option in a year or two).

The open source x264 is widely considered the state of the art in H.264 encoders. Given the large amount of source video we need to convert as part of the project, finding the optimal trade-off between encoding speed and quality with x264-based encoders (x264 itself, FFmpeg, MEncoder, HandBrake, etc.) is important.

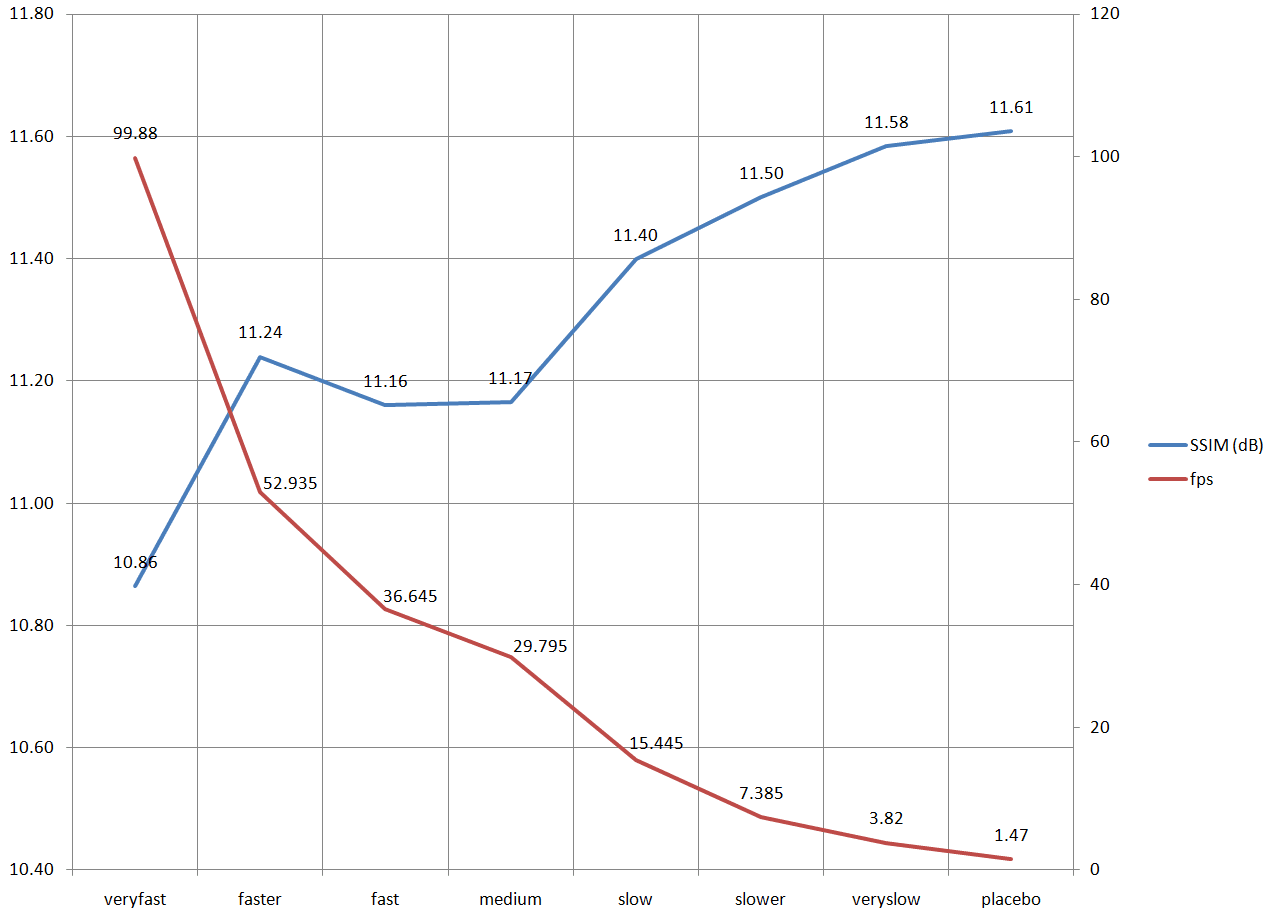

So I created a 720p video composed of several popular video test sequences concatenated together. All of these sequences come from lossless original sources, so we are not re-compressing the artifacts of another video codec. The sequences are designed to torture video codecs: scenes include splashing water, flames, slow pans, detailed backgrounds, and fast motion. I then ran two-pass 2500 kbps encodes using the presets distributed with the x264 command-line encoder (version 0.110.1820 fdcf2ae). Except for the “ultrafast” preset, which does not use B-frames and was dropped from the charts as an extreme outlier, all of the presets produced files whose bitrates varied by less than 0.2%.

Here is the actual encoding command used in the test:

    x264 --preset {presetname} -B 2500 --ssim --pass {1|2} -o {output}.mp4 input.y4m
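To repeat the sweep, the command above can be driven from a small script. This is a minimal sketch, assuming x264 is on the PATH and the concatenated source is named input.y4m as in the command; the helper names and the per-preset output file names are hypothetical, and only the flags that appear in the command are used. Presets run one at a time because pass 2 reads the statistics that pass 1 wrote to x264's default stats file in the working directory.

```python
import subprocess

# Preset names shipped with the x264 command-line encoder, fastest to slowest.
PRESETS = [
    "ultrafast", "superfast", "veryfast", "faster", "fast",
    "medium", "slow", "slower", "veryslow", "placebo",
]


def two_pass_commands(preset, bitrate_kbps=2500, source="input.y4m", output=None):
    """Return argv lists for pass 1 and pass 2, mirroring the test command."""
    output = output or f"{preset}.mp4"  # hypothetical naming scheme
    return [
        ["x264", "--preset", preset, "-B", str(bitrate_kbps), "--ssim",
         "--pass", str(n), "-o", output, source]
        for n in (1, 2)
    ]


def run_all(presets=PRESETS, dry_run=True):
    """Collect (and, unless dry_run is True, execute) both passes per preset."""
    issued = []
    for preset in presets:
        # Pass 1 must finish before pass 2 starts, and before the next preset
        # overwrites the shared first-pass stats file.
        for argv in two_pass_commands(preset):
            issued.append(argv)
            if not dry_run:
                subprocess.run(argv, check=True)  # stop the sweep on any failure
    return issued


if __name__ == "__main__":
    for argv in run_all():
        print(" ".join(argv))
```

With `dry_run=True` the script only prints the twenty command lines, which is a cheap way to check them before committing hours of CPU time to the slow presets.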

The mean luminance SSIM (as reported by x264) was used as the objective quality metric in my tests. Yes, I know about the weaknesses of using arithmetic means for video metrics, and the benefits of box-and-whisker plots for showing variance between frames. However, a single number is quite illustrative here, especially since we are testing the same basic codec at the same bitrate with marginally differing tunings. This was a quick-and-dirty test. If I find the time to get AviSynth working correctly on my Windows 7 x64 machine, I will update the plots to include variance information.
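The concern about arithmetic means can be made concrete. x264 reports only the mean, so per-frame SSIM values would have to come from another tool (the post mentions AviSynth); the list below is entirely made up for illustration, and `summarize` is a hypothetical helper. It computes the numbers a box-and-whisker plot would show alongside the mean.

```python
import statistics


def summarize(per_frame_ssim):
    """Five-number summary plus the arithmetic mean of per-frame luma SSIM values."""
    q1, median, q3 = statistics.quantiles(per_frame_ssim, n=4)
    return {
        "mean": statistics.fmean(per_frame_ssim),
        "min": min(per_frame_ssim),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(per_frame_ssim),
    }


# Hypothetical data: nine easy frames and one badly damaged frame.
frames = [0.98] * 9 + [0.80]
for key, value in summarize(frames).items():
    print(f"{key:>6}: {value:.3f}")
```

Here the mean (about 0.962) looks respectable, while only the minimum reveals the one frame a viewer would actually notice.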

[Chart: quality versus encoding speed]

I was quite surprised that there was only a 0.75 dB difference in mean SSIM between the veryfast and placebo presets, even though placebo was 68 times slower than veryfast. I would have expected much more quality improvement for the CPU effort expended, given that placebo managed just 1.5 fps on an eight-core machine. Subjectively, the results are indistinguishable to me, even when stepping frame by frame through the toughest sections of the video.
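To put the 0.75 dB figure in perspective: x264 expresses SSIM in decibels as -10·log10(1 − SSIM). The sketch below converts in both directions. The 0.95 baseline is a hypothetical value, since the post does not list absolute SSIM scores, and the helper names are illustrative.

```python
import math


def ssim_to_db(ssim):
    """SSIM in decibels, as x264 reports it: -10 * log10(1 - SSIM)."""
    return -10.0 * math.log10(1.0 - ssim)


def db_to_ssim(db):
    """Inverse conversion: SSIM = 1 - 10 ** (-dB / 10)."""
    return 1.0 - 10.0 ** (-db / 10.0)


def ssim_after_gain(ssim, gain_db):
    """Linear SSIM reached by adding gain_db to a baseline SSIM value."""
    return db_to_ssim(ssim_to_db(ssim) + gain_db)


baseline = 0.95  # hypothetical baseline; not a measured value from the test
print(f"{baseline:.4f} -> {ssim_after_gain(baseline, 0.75):.4f}")

# Throughput implied by the post's numbers: placebo ran at 1.5 fps, 68x slower.
print(f"implied veryfast speed: about {1.5 * 68:.0f} fps")
```

Whatever the baseline, a 0.75 dB gain shrinks the remaining distortion (1 − SSIM) by a factor of 10^(−0.075), roughly 16%, bought at a 68-fold increase in encoding time.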

Needless to say, all of my x264 encoding will now be done with the medium preset or faster. At those speeds, decoding the lossless source video, rather than encoding, actually became the bottleneck. If there is interest, please leave a comment, and I will find a hosting spot for the lossless, veryfast, and placebo versions of the video so others can compare, reproduce, or extend this simple test.

Comments:

Anonymous -

Oh, please do so!

Polar Torsen -

Thanks for posting this! I had been wondering for some time how much of a gain in visual quality there actually is between the Medium and Slow presets. I guess I’ll just do all my encodes with Medium from now on. Thanks again.

RPM -

While far from a scientific mean opinion score (MOS) study, we did ask our QA group (about a dozen internal people with varying visual acuity) to judge the quality differences in some sample clips. As a group, they detected no differences whatsoever between the medium and placebo settings at the same bitrate. This was in an office environment, however; viewing on a large movie-theater screen might, I suppose, reveal some differences to the visually acute. But that was not our use case, and we did not test it.

Anonymous -

What were the size differences between the presets? Did placebo, for example, generate a much smaller file?

RPM -

The bitrate was specified as 2.5 Mbps for all encodes, so there were no significant file size differences (less than 1 percent, as I recall).
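The reason the sizes match is simple arithmetic: with a bitrate target, the stream size is roughly bitrate × duration, so presets can only differ in how closely rate control hits the target. A minimal sketch follows; the 60-second duration is hypothetical and container overhead is ignored.

```python
def expected_size_bytes(bitrate_kbps, duration_s):
    """Approximate stream size for a target bitrate, ignoring container overhead."""
    return bitrate_kbps * 1000 * duration_s / 8  # kilobits/s -> bytes


def percent_difference(size, reference):
    """How far one file's size is from a reference size, in percent."""
    return abs(size - reference) / reference * 100.0


# Hypothetical 60-second clip at the 2500 kbps target used in the test.
target = expected_size_bytes(2500, 60)
print(f"expected size: {target / 1e6:.2f} MB")
```

Comparing each encode's actual size against this target with `percent_difference` gives the kind of "less than 1 percent" figure quoted above.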

Now, if you do a fixed-quality encode, there will be a size difference. But in my tests the size difference is very small all the way from veryfast to placebo. There are outliers, though: ultrafast and superfast do seem to produce significantly larger files when fixed quality rather than a fixed bitrate is used. But they are really, really fast, much faster than realtime on my systems.
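A fixed-quality comparison can be set up along the same lines. This sketch assumes "fixed quality" means x264's constant rate factor (`--crf`, whose default is 23); the output names and helper functions are hypothetical, and the sizes in the usage line are made-up numbers, not results from these tests.

```python
import os


def crf_command(preset, crf=23, source="input.y4m"):
    """argv for a single-pass, fixed-quality (constant rate factor) x264 encode."""
    output = f"{preset}_crf{crf}.mp4"  # hypothetical naming scheme
    return ["x264", "--preset", preset, "--crf", str(crf), "--ssim", "-o", output, source]


def measured_sizes(presets, crf=23):
    """Sizes on disk of finished encodes; run each crf_command first."""
    return {p: os.path.getsize(crf_command(p, crf)[-2]) for p in presets}


def relative_sizes(sizes, reference="medium"):
    """Express each preset's file size as a multiple of the reference preset's."""
    ref = sizes[reference]
    return {preset: size / ref for preset, size in sizes.items()}


# Made-up sizes, only to show the shape of the result.
print(relative_sizes({"medium": 100, "superfast": 130, "placebo": 97}))
```

Unlike the two-pass runs, a single pass suffices here because the encoder is not trying to hit a size target.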

Anonymous -

> If there is interest, please leave a comment, and I will find a hosting spot for the lossless, veryfast, and placebo versions of the video so others can compare, reproduce, or extend this simple test.

Hi, really nice article. Could you please do so? Thanks.

RPM -

Unfortunately, too much time has passed, and I seem to have misplaced the original files. That said, I created them by concatenating lossless clips from the xiph.org archive with ffmpeg, resizing to 1920x1080 where needed. I believe I used the clips “aspen”, “controlled_burn”, “ducks_take_off”, “factory”, “old_town_cross”, “park_joy”, “red_kayak”, “snow_mnt”, and “touchdown_pass”, in that order. As mentioned, these clips are a torture test for a video codec.
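For anyone rebuilding a similar source, here is one way to express that step with ffmpeg's `scale` and `concat` filters. It is a sketch, not the command actually used: the local file names (`<clip>.y4m`) are hypothetical, since the archive's real names may carry resolution suffixes, and clips with differing frame rates may additionally need an `fps` filter. Width and height are parameters, so a 720p source like the one in the post would use 1280x720.

```python
# Clip order as recalled in the comment.
CLIPS = [
    "aspen", "controlled_burn", "ducks_take_off", "factory", "old_town_cross",
    "park_joy", "red_kayak", "snow_mnt", "touchdown_pass",
]


def concat_command(clips, output="input.y4m", width=1920, height=1080, suffix=".y4m"):
    """Build an ffmpeg argv that scales every clip to one size and joins them."""
    argv = ["ffmpeg"]
    for name in clips:
        argv += ["-i", name + suffix]  # hypothetical local file names
    # The concat filter needs matching frame sizes, so scale each input first
    # and force square pixels with setsar=1.
    chains = [f"[{i}:v]scale={width}:{height},setsar=1[v{i}]" for i in range(len(clips))]
    labels = "".join(f"[v{i}]" for i in range(len(clips)))
    graph = ";".join(chains) + f";{labels}concat=n={len(clips)}:v=1:a=0[out]"
    # A .y4m output name keeps the result uncompressed for the x264 runs.
    argv += ["-filter_complex", graph, "-map", "[out]", output]
    return argv


if __name__ == "__main__":
    print(" ".join(concat_command(CLIPS)))
```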

RPM -

FYI, the xiph.org test media archive is here: http://media.xiph.org/video/derf/

G Fichtner -

My experience has been about a 50% smaller file size with fixed quality. I took the “IPAD” preset as the base (which uses x264’s medium preset), then raised the fixed-quality setting from 20 to 23 and changed the x264 preset to placebo, which resulted in ~50% smaller files. I would really like to know how some of the other settings compare, though, because placebo works just like its name implies: very, very slowly. It takes over 24 hours for a one-hour home video.
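Part of that 50% can be estimated from the quality change alone. Assuming the 20 → 23 setting maps to x264’s CRF, a commonly quoted rule of thumb is that each +6 CRF roughly halves the file size. The sketch below applies that approximation; it is a heuristic that varies with content, and the helper names are hypothetical.

```python
import math


def crf_size_ratio(crf_from, crf_to):
    """Rough file-size ratio for a CRF change, using the '+6 CRF halves size' heuristic."""
    return 2 ** (-(crf_to - crf_from) / 6)


def crf_change_for_ratio(ratio):
    """CRF increase the same heuristic associates with a given size ratio."""
    return -6 * math.log2(ratio)


print(f"CRF 20 -> 23 alone: about {crf_size_ratio(20, 23):.2f}x the original size")
print(f"A 50% reduction corresponds to roughly +{crf_change_for_ratio(0.5):.0f} CRF")
```

By this estimate the CRF change alone accounts for roughly a 29% reduction; whether the rest comes from the preset change or from the content itself would need a test that varies one setting at a time.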